Database at scale - Introduction

What does running a database at scale mean, and how do we approach it in different types of databases?

In this series, we will explore the fundamentals of scaling databases. Here are the series' components.

Introduction - you’re here

Basic replication

Multiple masters

Sharding - Proxy

NoSQL CassandraDB/ScyllaDB

A database server is typically a remote endpoint that listens for new read or write requests, which can be made through different methods such as REST, native libraries, and others.

In typical database cluster setups, there is a single source of truth, a server that contains the data and handles read and write requests.

To ensure high availability in a database deployment, you should have at least three sources of truth, so that one can take over if the others fail.

Terminology

When discussing databases at scale, it's essential to familiarize ourselves with some new terminology, which we’ll get to know in this part:

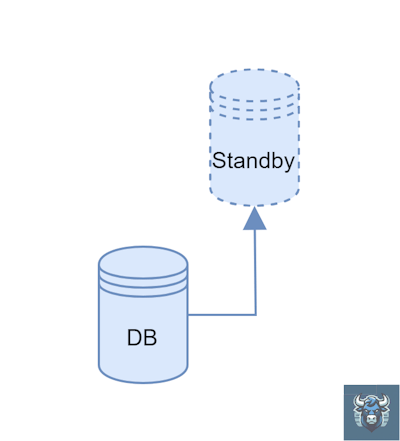

Replication

Databases are central to managing data, and storing all your data in one place can be risky. This is where replication comes into play.

A replica serves as a secondary source of truth that holds the same information as the original source.

Standby Server

Any data written to the main DB server is replicated to the Standby server either synchronously or asynchronously.

The Standby server remains inactive. If the main DB server crashes, the Standby server takes over and becomes the main DB server.

When the crashed DB server comes back, it becomes the new Standby server.
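The failover flow described above can be sketched in a few lines. This is only an in-memory illustration, not a real deployment: the `StandbyPair` class, its method names, and the server names `db-1` and `db-2` are all hypothetical.

```javascript
// Hypothetical in-memory model of a main/standby pair (not a real driver API).
class StandbyPair {
  constructor(main, standby) {
    this.main = main;       // server currently handling requests
    this.standby = standby; // inactive server that receives replicated data
  }

  // Called when the main server crashes: the standby takes over.
  failover() {
    const crashed = this.main;
    this.main = this.standby;
    this.standby = null; // no standby until the crashed server recovers
    return crashed;
  }

  // Called when the crashed server comes back: it becomes the new standby.
  rejoin(server) {
    this.standby = server;
  }
}

const pair = new StandbyPair('db-1', 'db-2');
const crashed = pair.failover(); // db-1 crashes, db-2 becomes the main server
pair.rejoin(crashed);            // db-1 returns as the new standby
console.log(pair.main, pair.standby); // db-2 db-1
```

Note that between `failover()` and `rejoin()` there is no standby at all, which is the window in which a second crash would take the database down.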

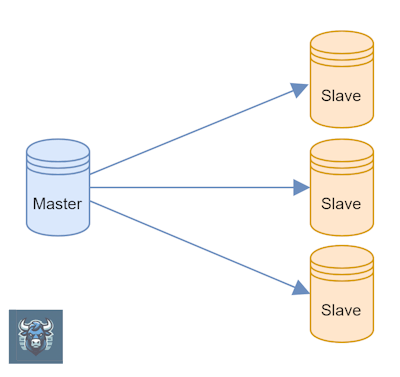

Master / Slave

A Master database server listens for incoming read and write requests, while a Slave database server listens for read requests only.

Any new data written to the master is replicated to the slaves using one of two methods:

Synchronously - a write is committed on the master only once every slave has confirmed it, ensuring consistency at the expense of slower writes.

Asynchronously - data is committed to the master on the spot, and the slaves are updated in the background. This approach speeds up writes, but at the cost of consistency: a slave may briefly return stale data.
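To make the trade-off concrete, here is a minimal simulation of both modes. It is a sketch under heavy assumptions: the "servers" are plain `Map` objects, the 10 ms timer stands in for network latency, and the names `replicate`, `writeSync`, `writeAsync`, and `demo` are hypothetical. Real databases implement replication inside the engine, not in application code.

```javascript
// Hypothetical in-memory servers: each one is just a Map of key -> value.
const master = new Map();
const slaves = [new Map(), new Map()];

// Simulates the network delay of copying one write to one slave.
function replicate(slave, key, value) {
  return new Promise((resolve) => {
    setTimeout(() => {
      slave.set(key, value);
      resolve();
    }, 10);
  });
}

// Synchronous replication: the write is committed only after every
// slave has stored it, so the caller waits for the slowest slave.
async function writeSync(key, value) {
  await Promise.all(slaves.map((s) => replicate(s, key, value)));
  master.set(key, value);
}

// Asynchronous replication: the master stores the write and returns
// right away; the slaves catch up in the background.
function writeAsync(key, value) {
  master.set(key, value);
  return Promise.all(slaves.map((s) => replicate(s, key, value)));
}

async function demo() {
  await writeSync('a', 1);
  const syncVisible = slaves.every((s) => s.get('a') === 1); // true

  const background = writeAsync('b', 2);
  const staleRead = slaves[0].has('b'); // false: the slave has not caught up yet
  await background;
  const caughtUp = slaves[0].has('b'); // true

  return { syncVisible, staleRead, caughtUp };
}
```

Calling `demo()` shows the difference: after the synchronous write every slave already has the value, while right after the asynchronous write a read from a slave misses it until the background copy finishes.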

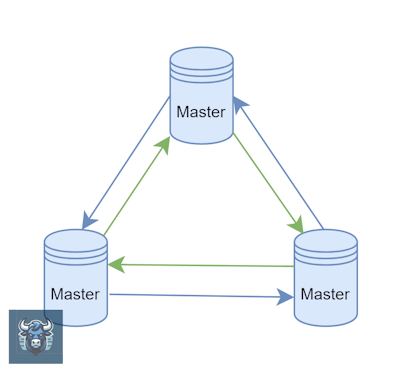

Multiple Masters

Unlike the previous topology, every server in this one accepts write requests as well as read requests.

Any write request will be propagated to the rest of the servers synchronously or asynchronously.

The main advantage of this topology is that any endpoint can be used to write new data to the database, offering flexibility and convenience.

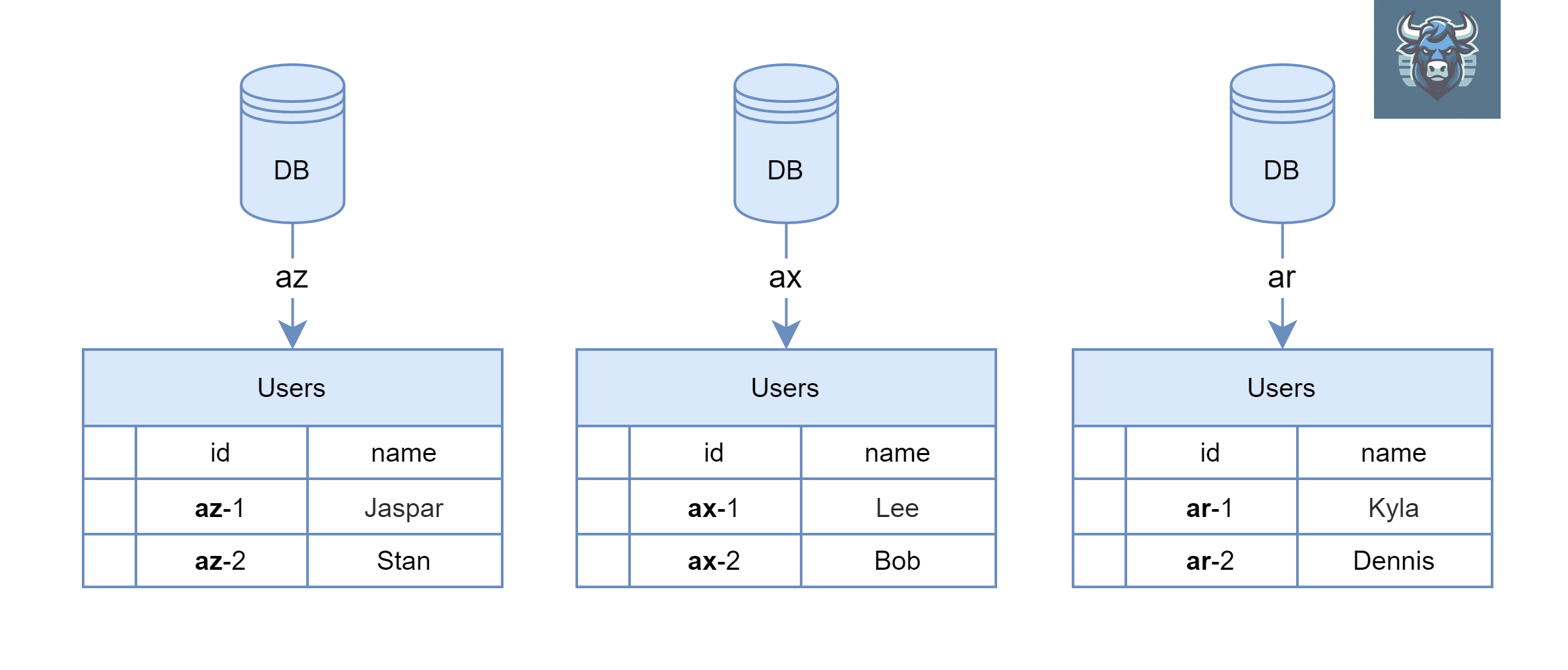

Sharding

All of the previously mentioned solutions provide redundancy but fail to address a crucial issue.

What happens if your data exceeds the capacity of one server? Even with multiple slaves or masters, each containing the same data, you can only scale your database's writing and reading capabilities, not its storage capacity.

To overcome this limitation, you can implement sharding.

When sharding, each new row is directed to a specific predetermined database server based on certain criteria.

In the example topology mentioned above, each database server contains identical tables with the same columns. For instance, when inserting an ID that starts with az, the data will be inserted into the first database server. Similarly, when reading an ID starting with ar, all the data will be fetched from the last database server.

Proxy

Except in the standby topology, you’ll need a way to access all of the servers as if they were one giant unit.

In a master-master topology, where every server handles both read and write requests, a simple Layer 4 load balancer can distribute requests evenly.
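"Evenly" usually means something like round-robin. The sketch below shows only the selection logic. A real Layer 4 load balancer forwards TCP connections rather than returning an IP address, and the `masters` list and `pickServer` function are hypothetical names with made-up addresses.

```javascript
// Hypothetical master IPs; a real Layer 4 balancer forwards TCP connections,
// this sketch only shows how the next server could be picked.
const masters = ['10.0.0.15', '10.0.0.16', '10.0.0.17'];
let next = 0;

// Round-robin: hand out servers in order and wrap around at the end.
function pickServer() {
  const ip = masters[next];
  next = (next + 1) % masters.length;
  return ip;
}

const picks = [pickServer(), pickServer(), pickServer(), pickServer()];
console.log(picks); // the fourth pick wraps back to the first server
```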

For master-slave and sharding topologies, you’ll need a proxy. You can implement it programmatically in your own code or use a service proxy.

Code-level

For example, you can encapsulate your database connector with a code wrapper that directs requests to the appropriate database server.

In a master-slave topology, you can place all the slaves behind a Layer 4 load balancer and route each request according to whether it is a read or a write, as in this pseudocode:

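    // Writes go to the master; reads go to the load balancer in front of the slaves.
    const masterIP = '10.0.0.15';
    const loadBalancerIP = '10.0.0.60';

    // Returns the IP address a request should be sent to.
    function actionToServer(isWrite = false) {
      let ip = loadBalancerIP;
      if (isWrite) {
        ip = masterIP;
      }
      return ip;
    }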
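A wrapper around the database connector also has to decide whether a given request is a read or a write. One possible way, shown here as a rough sketch, is to look at the first keyword of the SQL statement. The helper names `isWriteQuery` and `routeQuery` are hypothetical, and the rule "anything that is not a plain SELECT is a write" is an assumption that will not fit every workload (for example, `SELECT ... FOR UPDATE` should go to the master).

```javascript
const masterIP = '10.0.0.15';       // same addresses as the example above
const loadBalancerIP = '10.0.0.60';

// Assumption: anything that is not a plain SELECT counts as a write.
function isWriteQuery(sql) {
  return !/^\s*select\b/i.test(sql);
}

// Hypothetical wrapper: returns the server a query should be sent to.
function routeQuery(sql) {
  return isWriteQuery(sql) ? masterIP : loadBalancerIP;
}

console.log(routeQuery('SELECT * FROM users'));            // 10.0.0.60
console.log(routeQuery("INSERT INTO users VALUES ('x')")); // 10.0.0.15
```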

In a sharding topology, you would check the ID of the request to map it to the correct server. Here's an example:

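    // Maps a two-character shard key to the server that stores it.
    const sharding = {
      "aa": "192.168.1.4",
      "ab": "192.168.1.5",
      "ac": "192.168.1.6",
      "ad": "192.168.1.7",
      "az": "192.168.1.8"
    };

    function shardKeyToServer(id = 'aa') {
      // The shard key is the first two characters of the ID.
      return sharding[id.slice(0, 2)];
    }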

Service-proxy

Using a code-level proxy can be beneficial but may be more challenging to maintain. For this reason, a service proxy can be a useful alternative.

Two popular service proxies are:

Mixing

It’s common to use a mix of the above topologies, for example:

The outer layer can be a sharding topology.

Then, each shard is deployed using a master-master topology.

Then, each of those masters has its own slaves in a master-slave topology.
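In code, a mixed topology means routing in stages. The sketch below combines two of the layers, sharding and the read/write split, and leaves out the master-master layer for brevity. The `shards` layout, the `resolveServer` function, and all IP addresses are hypothetical.

```javascript
// Hypothetical layout: every shard has its own master and its own
// load balancer in front of that shard's slaves. All IPs are made up.
const shards = {
  aa: { master: '192.168.1.4', readLoadBalancer: '192.168.2.4' },
  ab: { master: '192.168.1.5', readLoadBalancer: '192.168.2.5' },
};

// Step 1: pick the shard from the first two characters of the ID.
// Step 2: inside that shard, send writes to the master and reads to the slaves.
function resolveServer(id, isWrite = false) {
  const shard = shards[id.slice(0, 2)];
  if (!shard) {
    throw new Error(`No shard configured for ID "${id}"`);
  }
  return isWrite ? shard.master : shard.readLoadBalancer;
}

console.log(resolveServer('ab123', true)); // 192.168.1.5
console.log(resolveServer('aa9'));         // 192.168.2.4
```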

NoSQL vs YesSQL

The approaches mentioned above require significant effort, and they are typically associated with relational databases (YesSQL), as NoSQL databases often come with these capabilities built in.

The reason lies in YesSQL's main advantage: joins.

In YesSQL you don’t write any data twice, or at least you should avoid it. Instead, you create foreign keys to reference data in other tables.

However, a challenge arises when you scale your database across multiple servers. For instance, if a join needs data from both server 1 and server 2, a single query turns into several requests, and the number grows quickly with complex joins.

To address this issue in YesSQL databases, strict sharding is essential. This means ensuring that all related data, including the rows referenced by foreign keys, resides on the same physical server. However, such a strict sharding setup is not always achievable.
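One common way to keep related rows together is to shard a child table by the foreign key it references instead of by its own ID. The following sketch assumes a hypothetical `users`/`orders` schema; the `shardByPrefix` map, the server names, and both functions are illustrative only.

```javascript
// Hypothetical shard map keyed by the first two characters of a user ID.
const shardByPrefix = { aa: 'server-1', ab: 'server-2' };

function serverForUser(userId) {
  return shardByPrefix[userId.slice(0, 2)];
}

// An order is sharded by the user it references (its foreign key),
// not by its own ID, so it always lands next to that user's row.
function serverForOrder(order) {
  return serverForUser(order.userId);
}

const order = { id: 'zz-901', userId: 'ab-17' };
console.log(serverForOrder(order) === serverForUser('ab-17')); // true
```

With this rule, a join between a user and their orders never leaves one server. A join that follows a different foreign key, for example from an order to a product, would still cross servers, which is why strict sharding is not always achievable.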

Going forward

In the upcoming sections of the series, we will delve into the fundamentals of deploying these kinds of topologies.

To make the examples in the coming articles easy to follow, we’ll use Docker and Docker Compose.