What?

Kubernetes is the industry standard for deploying scalable applications.

Kubernetes is commonly shortened to k8s, where the 8 stands for the 8 characters between the k and the s.
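The abbreviation rule can be sketched as a tiny shell function. The name `numeronym` is made up for this illustration and is not part of any Kubernetes tooling.

```sh
# numeronym WORD: first letter + number of letters in between + last letter.
# Hypothetical helper, only meant to show how "k8s" is derived.
numeronym() {
    word=$1
    len=${#word}
    # Words of three letters or fewer have nothing worth abbreviating.
    if [ "$len" -le 3 ]; then
        printf '%s\n' "$word"
        return
    fi
    first=$(printf '%s' "$word" | cut -c1)
    last=$(printf '%s' "$word" | cut -c"$len")
    printf '%s%d%s\n' "$first" "$((len - 2))" "$last"
}

numeronym kubernetes   # prints k8s
```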

There are two general ways to use k8s:

Managed: The k8s cluster is already deployed for you, and you only need to manage the application life cycle. Offered by many companies, including Amazon, Azure, Google, DigitalOcean, Linode and many more. You can find the full official list in the CNCF landscape, in the Certified Kubernetes - Hosted section.

Self-Managed: Here you also need to deploy and manage the cluster yourself. You have a variety of distributions to choose from, which can be found at the same link above in the Certified Kubernetes - Distribution section.

Application developers (CKAD) mostly go with a managed solution, while Kubernetes administrators (CKA) go with the latter; check here for more details about k8s certificates.

k3s is one of the official k8s distributions, meant to be a lightweight version of k8s.

Because of its lightweight nature, k3s was named to suggest something half the size of k8s.

Kubernetes has 10 characters, so k3s stands for a word half as long, 5 characters, and the 3 marks the middle 3 characters.

There is no long form for k3s.

Why?

k8s is a full-featured solution, meant to cover all use cases. As such, k8s is a complex product, and deploying a k8s cluster is a complex task as well.

k3s is a much lighter version, and deploying a basic k3s cluster takes less than a minute. Even complex k3s deployments take no more than 30-90 minutes.

k3s achieves that by providing a light, stripped-down version of k8s that lacks many of the large-scale features. Because of that, you can run a single-node k3s cluster. And thanks to its lightweight nature, it consumes few resources while letting you deploy apps k8s-style.

Although k3s lacks some k8s features, it is a CNCF-certified distribution of k8s, meaning you can use your YAML files against k3s and they will work!
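As a rough illustration, the sketch below writes a minimal Deployment manifest to a file. Everything in it (the `write_manifest` helper, the `hello-web` name, the `nginx:stable` image, the file name) is an assumption made up for this example; the point is only that a standard manifest like this one is not k3s-specific.

```sh
# write_manifest FILE: write a minimal Deployment manifest to FILE.
# All values below are example values, not taken from the article.
write_manifest() {
    cat > "$1" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-web
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hello-web
  template:
    metadata:
      labels:
        app: hello-web
    spec:
      containers:
        - name: web
          image: nginx:stable
          ports:
            - containerPort: 80
EOF
}

write_manifest hello-web.yaml
echo "wrote hello-web.yaml"
```

You would then run `kubectl apply -f hello-web.yaml` against a k3s cluster, and later run the same command with the same file against a managed or self-managed k8s cluster.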

One of the biggest advantages of starting to use k3s in your environments is that you get used to deploying applications the k8s way. When you later move to k8s, managed or self-managed, the only thing that changes is the cluster engine, not the way you interact with it.

k3s vs k8s

The headline difference, "k3s is lighter than k8s", doesn't tell us much, so let's break the differences down a bit more.

| Feature | k3s | k8s |
| --- | --- | --- |
| Cloud specific | None | Included |
| Security | Basic | Advanced |
| Storage driver | Limited list | All of them |
| Auto scaling | Not available | Available |
| Processes | Single binary | Multiple binaries |
| Cluster info driver | SQLite, etcd, external SQL | etcd, external SQL |

These differences make k3s less robust than k8s, but much more suitable for other use cases, such as:

Edge computation

Home labs

Internal k8s clusters

Starting point to get used to k8s

Any scale of application that does not use k8s as of today

The last bullet point is important. Why? Leaving managed container services such as ECS aside, most of today's applications are deployed using one of the following approaches:

Traditional way: you install and maintain the required infrastructure as the need grows. For example, when you suddenly need NodeJS in your environment, you just install it.

Docker & Docker compose way: a single server with container management.

Docker Swarm: Docker orchestration tools.

The first approach is hard to maintain, while the second doesn't scale (not easily, at least), which leaves us with Docker Swarm.

Docker Swarm is a good approach for HA and FT applications, though at some point, as your applications grow, you'll find yourself in need of k8s.

k3s provides deployment options that range from a single server up to an HA cluster that matches the capabilities of Docker Swarm. This means you can keep the benefits you have today while starting to shift towards k8s-style deployments.

Deploying

Architecture

k3s can be deployed on bare metal, a VPS and even a Raspberry Pi, in one of two ways: as a k3s server or as a k3s agent.

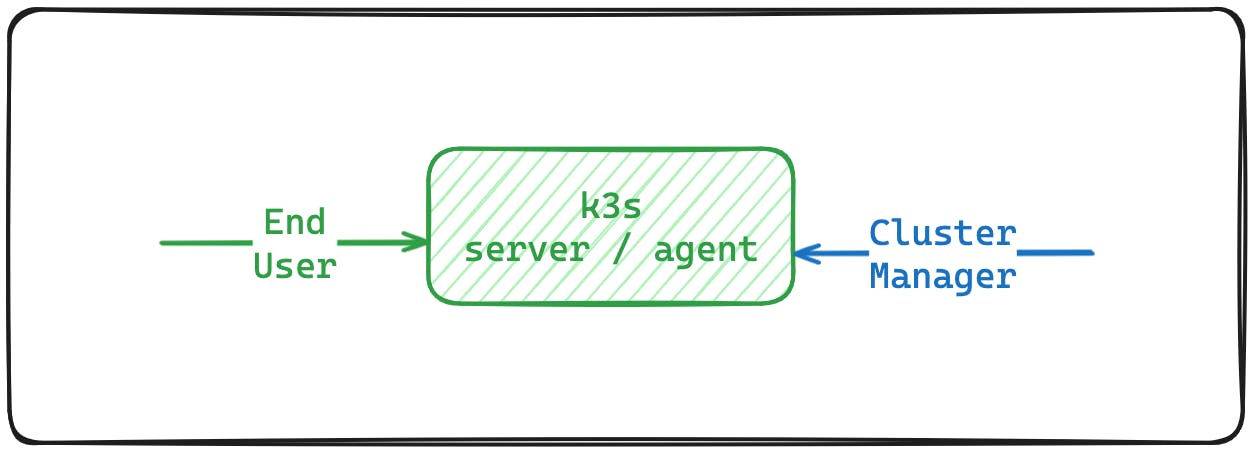

Image from the k3s documentation.

The k3s server includes additional tools for managing the cluster, while the k3s agent includes only the tools needed for running pods, services and other k8s resources, plus a way to communicate with the k3s server(s): the k3s supervisor.

As can be seen in the architecture, k3s always uses a single binary to handle all of its components and tools.

By default, k3s servers also act as agents when resources are deployed. We can block this behavior by tainting the k3s server nodes.

Taints in k8s are a way to declare which resources can be deployed on a given node by declaring a key-value pair, for example:

kubectl taint nodes k3s-server1 taint=server:NoSchedule

This command sets the taint=server key-value pair on the k3s-server1 node. Any resource that needs to be deployed on that node must add a toleration for the key-value pair to its declaration, for example:

tolerations:
- key: "taint"
  operator: "Equal"
  value: "server"
  effect: "NoSchedule"

The NoSchedule effect means the taint only applies to scheduling from now on and doesn't affect resources that are already running.
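The taint argument always has the shape key=value:effect, and appending a trailing `-` to the same argument removes the taint again. A small sketch, where `taint_spec` is a hypothetical helper and the commands are only printed, not executed:

```sh
# taint_spec KEY VALUE EFFECT: build the "key=value:effect" argument that
# `kubectl taint` expects, rejecting effects Kubernetes does not know.
# Hypothetical helper for illustration only.
taint_spec() {
    case $3 in
        NoSchedule|PreferNoSchedule|NoExecute) ;;
        *) echo "unknown taint effect: $3" >&2; return 1 ;;
    esac
    printf '%s=%s:%s\n' "$1" "$2" "$3"
}

spec=$(taint_spec taint server NoSchedule)

# Print the commands instead of running them, so nothing changes by accident.
echo "kubectl taint nodes k3s-server1 ${spec}"    # adds the taint
echo "kubectl taint nodes k3s-server1 ${spec}-"   # trailing "-" removes it
```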

By default, k3s comes with Traefik as a built-in ingress controller. Make sure to target this ingress class in your YAML files. This feature, like almost everything in k3s, can be disabled.
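Targeting the built-in ingress class could look roughly like the sketch below. The `write_ingress` helper, the `hello.example.com` host and the `hello-web` Service on port 80 are assumptions for this example; only the `traefik` class name refers to what k3s ships.

```sh
# write_ingress FILE: write an Ingress that targets the Traefik ingress class.
# Host and backend Service are placeholder values.
write_ingress() {
    cat > "$1" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-web
spec:
  ingressClassName: traefik
  rules:
    - host: hello.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: hello-web
                port:
                  number: 80
EOF
}

write_ingress hello-ingress.yaml
echo "wrote hello-ingress.yaml"
```

If you would rather bring your own ingress controller, the install command accepts `--disable traefik` after `server`.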

We will cover the following deployments.

One k3s server

Three k3s servers for high availability

One k3s server and three k3s agents.

One k3s server with taint and three k3s agents.

Three k3s servers with taint and three k3s agents.

One Server

We'll start with the basics.

In this example we'll deploy a single-node k3s cluster.

In this deployment, both the end users and the cluster managers who use kubectl access the cluster the same way.

To deploy the cluster, ssh into your server and run:

curl -sfL https://get.k3s.io | sh -s - server

The command fetches the content of https://get.k3s.io and executes it using sh. That's it, you now have a fully working k3s cluster.

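You can get your kubeconfig file from /etc/rancher/k3s/k3s.yaml and copy it to your local machine to use it with kubectl. The file points at https://127.0.0.1:6443, so replace that address with your server's IP in the local copy.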
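A minimal sketch of that copy step, assuming the kubeconfig that k3s writes to /etc/rancher/k3s/k3s.yaml still points at the loopback address. The `point_kubeconfig_at` helper, the file names and the 203.0.113.10 address are placeholders.

```sh
# point_kubeconfig_at FILE HOST: print FILE with the loopback API address
# replaced by HOST, the address you reach the server on.
# Hypothetical helper for illustration only.
point_kubeconfig_at() {
    sed "s#https://127.0.0.1:6443#https://$2:6443#" "$1"
}

# Typical use from your local machine (203.0.113.10 is a placeholder IP):
#   scp root@203.0.113.10:/etc/rancher/k3s/k3s.yaml ./k3s-remote.yaml
#   point_kubeconfig_at ./k3s-remote.yaml 203.0.113.10 > ~/.kube/k3s.yaml
#   KUBECONFIG=~/.kube/k3s.yaml kubectl get nodes
```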

Three Servers

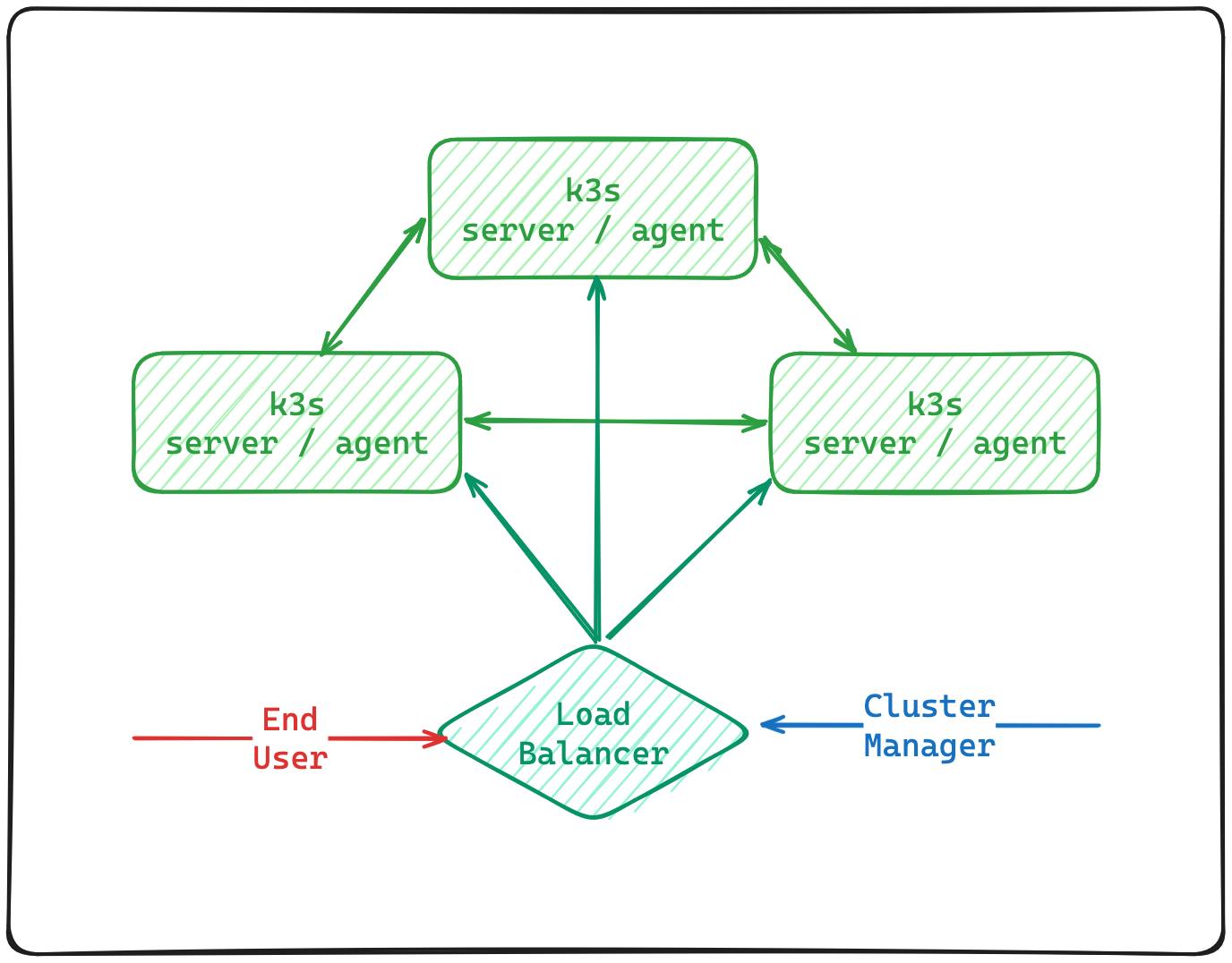

In this deployment we will achieve high-availability by deploying 3 servers into the cluster.

We're not deploying any agents yet, as the servers are not tainted and can be used as agents too.

In this deployment we will put all the servers behind a load balancer.

In its default settings k3s uses SQLite as its data store, which won't work anymore now that our cluster is distributed. For distributed clusters k3s supports either distributed etcd or an external SQL database; we'll go with distributed etcd.

When using etcd, the server IPs must be local ones, meaning all servers must share a VPC and be placed in the same region. For a cross-region cluster deployment you'll need to use the external database option.

ssh into the first server and run

curl -sfL https://get.k3s.io | sh -s - server --cluster-init

Using the --cluster-init flag installs k3s with etcd. (You can also use this command to convert an existing single-node k3s from SQLite to etcd.)

Now we need the k3s token. The token is located at /var/lib/rancher/k3s/server/token and we can read it like this:

$ cat /var/lib/rancher/k3s/server/token

K107df354fe86b....::server:THIS_IS_THE_TOKEN

The last part, after ::server:, is the token. We will use that token and the existing server's internal IP to join the other servers:

curl -sfL https://get.k3s.io | sh -s - server \
  --server https://10.0.0.3:6443 \
  --token "THIS_IS_THE_TOKEN"
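If you'd rather not copy the token by hand, the part after `::server:` can be cut out with sed. A small sketch, where `token_secret` is a hypothetical helper name:

```sh
# token_secret: read a k3s token line on stdin and print only the part
# after "::server:" (the line is printed unchanged if the marker is absent).
token_secret() {
    sed 's/^.*::server://'
}

echo 'K107df354fe86b....::server:THIS_IS_THE_TOKEN' | token_secret   # prints THIS_IS_THE_TOKEN
```

On the first server this would be used as `token_secret < /var/lib/rancher/k3s/server/token`.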

Now you can check all the nodes in the cluster as such:

:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k3s-1 Ready control-plane,etcd,master 2m18s v1.29.3+k3s1

k3s-3 Ready control-plane,etcd,master 96s v1.29.3+k3s1

k3s-2 Ready control-plane,etcd,master 2m52s v1.29.3+k3s1

You can see that each of the nodes has the control-plane and etcd roles.
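When scripting the setup, it can help to check that every expected node has actually joined and is Ready. The sketch below parses the default `kubectl get nodes` table; `all_nodes_ready` is a hypothetical helper, and it assumes the column layout shown above.

```sh
# all_nodes_ready EXPECTED: read `kubectl get nodes` output on stdin and
# succeed only if exactly EXPECTED nodes are listed and all of them are Ready.
all_nodes_ready() {
    awk -v want="$1" '
        NR == 1 { next }            # skip the header row
        { total++ }                 # every other row is one node
        $2 == "Ready" { ready++ }   # STATUS is the second column
        END { exit !(total + 0 == want + 0 && ready + 0 == want + 0) }
    '
}

# Usage against a live cluster:
#   kubectl get nodes | all_nodes_ready 3 && echo "all three servers are up"
```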

To access the cluster run your kubectl against the load balancer IP.

One Server three Agents

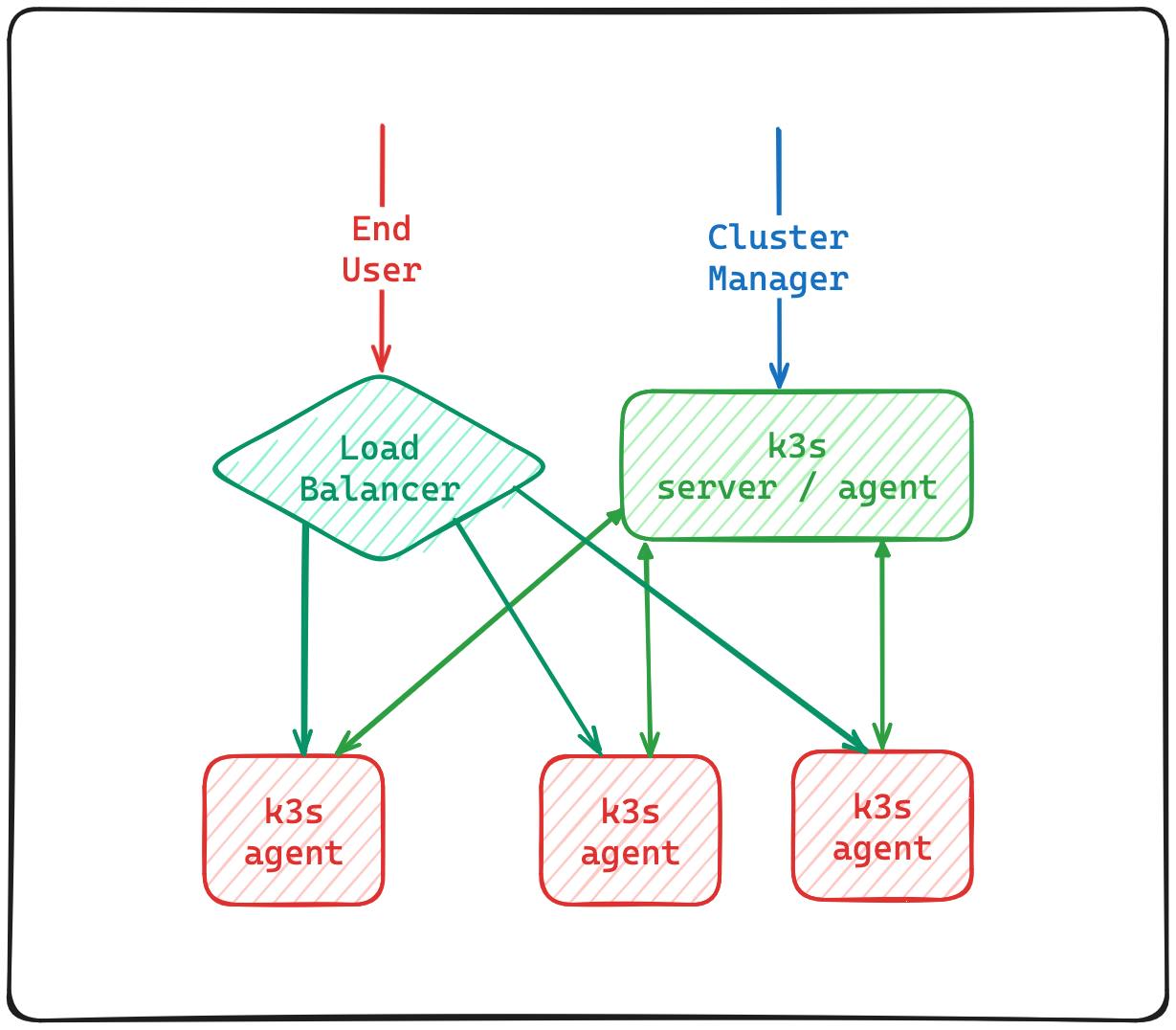

In this deployment we will deploy one server node using the default SQLite data store and connect 3 agents to it.

Because we haven't tainted the server node, it is included in the load balancer targets for the end users.

On the server node we'll run:

curl -sfL https://get.k3s.io | sh -s - server

That creates a single-node k3s cluster. Now we can connect agents using the server token and IP:

curl -sfL https://get.k3s.io | sh -s - agent \
  --server https://10.0.0.3:6443 \
  --token "THIS_IS_THE_TOKEN"

Run this command on the server, or on your local machine using kubectl:

:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k3s-1 Ready control-plane,master 3m22s v1.29.3+k3s1

k3s-3 Ready <none> 18s v1.29.3+k3s1

k3s-2 Ready <none> 11s v1.29.3+k3s1

k3s-4 Ready <none> 57s v1.29.3+k3s1

One Tainted Server three Agents

This deployment is the same as the one above; the only difference is that we are going to taint the server node.

As you can see, the load balancer is not pointing to the server node anymore.

Run this command to taint the server node (replace k3s-1 with your server node name):

kubectl taint nodes k3s-1 manager=only:NoExecute

Now we can check the node taints as such:

$#: kubectl describe nodes k3s-1 | grep Taint

Taints: manager=only:NoExecute

This prevents any new resources from being placed on the server node.

The NoExecute effect means that services currently running on the node will also be evicted.

Three Servers (tainted) three Agents

This would be the most complex deployment of them all.

In this deployment we'll have high availability for the server nodes, all of which are going to be tainted. All of the server nodes will sit behind a load balancer, which will be used by the agent nodes and the cluster manager.

First we run this to deploy a single-node k3s cluster with etcd enabled:

curl -sfL https://get.k3s.io | sh -s - server --cluster-init

In Hetzner, for example, you can create a server with no external IP at all, which is very useful for the server nodes.

Now, we run this on the other servers.

curl -sfL https://get.k3s.io | sh -s - server \
  --server https://10.0.0.2:6443 \
  --token "THIS_IS_THE_TOKEN"

Now we can taint all of our servers by running

kubectl taint nodes k3s-server-1 k3s-server-2 k3s-server-3 manager=only:NoExecute

And we can add agents using the servers' load balancer IP address:

curl -sfL https://get.k3s.io | sh -s - agent \
  --server https://10.0.0.6:6443 \
  --token "THIS_IS_THE_TOKEN"

Now we just need to add another load balancer in front of the agents.

Tips to go

To uninstall k3s from your server, you'll find a ready-to-use script inside /usr/local/bin:

# For server nodes

/usr/local/bin/k3s-uninstall.sh

# For agent nodes

/usr/local/bin/k3s-agent-uninstall.sh

It's recommended to first delete the node from the cluster before uninstalling k3s from it, for example:

# In a server node

$server: kubectl delete nodes agent-12

# In the agent node

$agent-12: /usr/local/bin/k3s-agent-uninstall.sh
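If the node is still running workloads, you may want to drain it before deleting it so its pods get rescheduled elsewhere first. The drain step is an addition to the sequence above, not something k3s requires; `agent_removal_plan` is a hypothetical helper that only prints the commands.

```sh
# agent_removal_plan NODE: print the commands for retiring an agent node.
# Nothing is executed; copy the lines to the right machine yourself.
agent_removal_plan() {
    node=$1
    cat <<EOF
kubectl drain $node --ignore-daemonsets --delete-emptydir-data
kubectl delete node $node
/usr/local/bin/k3s-agent-uninstall.sh   # run this one on $node itself
EOF
}

agent_removal_plan agent-12
```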

To get a better grasp of your cluster, practice how to:

Add servers and agents.

Set alarms for when a node is down.

Back up and restore the etcd or SQLite cluster database.
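As a starting point for the backup exercise, the sketch below prints one possible backup command per data store. `backup_plan` and the snapshot and archive names are made up, and stopping k3s around the SQLite copy is a cautious assumption rather than a documented requirement. The commands are only printed, so you can review them before running them on a server node.

```sh
# backup_plan DATASTORE: print backup commands for "etcd" or "sqlite".
# Hypothetical helper; names and the stop/start steps are assumptions.
backup_plan() {
    stamp=$(date +%Y%m%d-%H%M%S)
    case $1 in
        etcd)
            # On-demand snapshot of the embedded etcd data store.
            echo "k3s etcd-snapshot save --name manual-$stamp"
            ;;
        sqlite)
            # Archive the database directory together with the server token.
            echo "systemctl stop k3s"
            echo "tar -czf k3s-sqlite-$stamp.tar.gz -C /var/lib/rancher/k3s/server db token"
            echo "systemctl start k3s"
            ;;
        *)
            echo "usage: backup_plan etcd|sqlite" >&2
            return 1
            ;;
    esac
}

backup_plan etcd
```

Restoring an etcd snapshot is done on a stopped server with `k3s server --cluster-reset --cluster-reset-restore-path=<path to snapshot>`; try it on a throwaway cluster first.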

Finally, use a firewall in front of your cluster, especially in front of your server nodes.